Study and Update on GenAI DevEx

Internal GenAI developer tools, and learnings about vendors

Written by Vishruth Ashok (Dev Platform) and Brian Attwell, with input from Alex Filipchik, Michael Li, and many others.

At CloudKitchens, we’ve been proactively investing in ways to accelerate our productivity and improve our Developer Experience (DevEx) with internally and externally built GenAI tools (section: Comparison of GenAI Vendors), even though it is clear that many industry opinions on this tech are overblown hype (section: Marketing Hype).

Our internal studies have shown that coding assistants have had persistent categorical limitations over time. Yet subjectively, their usability and effectiveness are increasing. Engineers with high baseline productivity report sustained median savings of 3 hours per week, with bursts of larger savings, when using off-the-shelf GenAI tools (section: Productivity Impact by Use-Case). Deep dives into engineers’ workflows corroborated this median uplift.

We were initially concerned that these tools would lead to decreased reliability. We’re finding little evidence of this downside as usage continues amongst seasoned engineers (section: Adverse Quality Impact Not Found).

This blog post will cover how we are pragmatically vetting and evaluating these tools and driving widespread adoption to accelerate our developer base.

The space evolves rapidly, with new players and tools popping up seemingly every month. There is no clear winner in the market for GenAI coding assistants, and there appears to be room for disruption of the current market leaders. When we build GenAI into the core of our platforms to improve developer experience, we won’t do it in a way that couples us to any particular company.

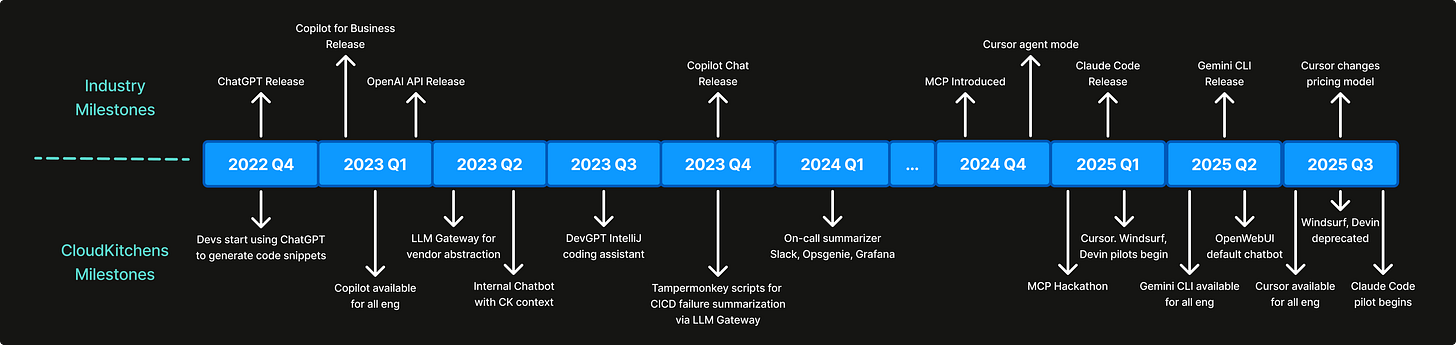

Our Journey So Far

We’ve been early and consistent adopters of new GenAI tools and capabilities. This extended to Developer Experience, which we illustrate in the figure below.

For example, early on, we built our LLM Gateway. Investing in this platform wrapper let all our engineers interact with a variety of LLMs without spending time on the burdensome scaffolding of integrating with vendors. It also lets us manage rate limits and get fine-grained usage and cost reporting.
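To make the gateway idea concrete, here is a minimal sketch of a wrapper that routes requests to interchangeable providers while enforcing a per-team rate limit and recording usage and cost. It is an illustration under assumptions, not our actual LLM Gateway: the names `LLMGateway`, `Provider`, `Usage`, `RateLimitExceeded`, and `fake_provider`, the sliding-window limit, and the per-1k-token pricing are all hypothetical.

```python
import time
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Callable, Deque, Dict, Tuple

# Hypothetical provider signature: prompt -> (completion text, tokens used).
Provider = Callable[[str], Tuple[str, int]]


class RateLimitExceeded(Exception):
    """Raised when a team exceeds its request budget for the current window."""


@dataclass
class Usage:
    requests: int = 0
    tokens: int = 0
    cost_usd: float = 0.0


class LLMGateway:
    """Single entry point to several models, with rate limits and usage reporting."""

    def __init__(self, max_requests_per_window: int, window_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._max = max_requests_per_window
        self._window = window_seconds
        self._clock = clock
        self._providers: Dict[str, Tuple[Provider, float]] = {}
        self._recent: Dict[str, Deque[float]] = defaultdict(deque)
        # Usage is keyed by (team, model) so reports can be sliced either way.
        self.usage: Dict[Tuple[str, str], Usage] = defaultdict(Usage)

    def register(self, model: str, provider: Provider, usd_per_1k_tokens: float) -> None:
        self._providers[model] = (provider, usd_per_1k_tokens)

    def complete(self, team: str, model: str, prompt: str) -> str:
        now = self._clock()
        recent = self._recent[team]
        # Drop request timestamps that have fallen out of the sliding window.
        while recent and now - recent[0] >= self._window:
            recent.popleft()
        if len(recent) >= self._max:
            raise RateLimitExceeded(f"{team} exceeded {self._max} requests per window")
        recent.append(now)

        provider, price = self._providers[model]
        text, tokens = provider(prompt)

        record = self.usage[(team, model)]
        record.requests += 1
        record.tokens += tokens
        record.cost_usd += tokens / 1000 * price
        return text


def fake_provider(prompt: str) -> Tuple[str, int]:
    """Stand-in for a real vendor client; counts whitespace-separated words as tokens."""
    return f"echo: {prompt}", len(prompt.split())
```

Callers only ever see `complete(team, model, prompt)`, so swapping or adding a vendor means registering another provider rather than touching call sites, which is the decoupling the gateway is meant to buy.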

Marketing Hype

As we steadily integrate GenAI tools into our daily workflows, we’ve been noticing some other early adopters expressing extreme positions. This started raising questions for us.

Massive amounts of useful code generated by “10x engineers” - Source

The age of superbuilders has begun - Source

Strong top-down AI-usage mandates - Source

With so much hype, we realized we needed to immerse ourselves and perform our own study to uncover the truth. We summarize some of our findings in further sections.

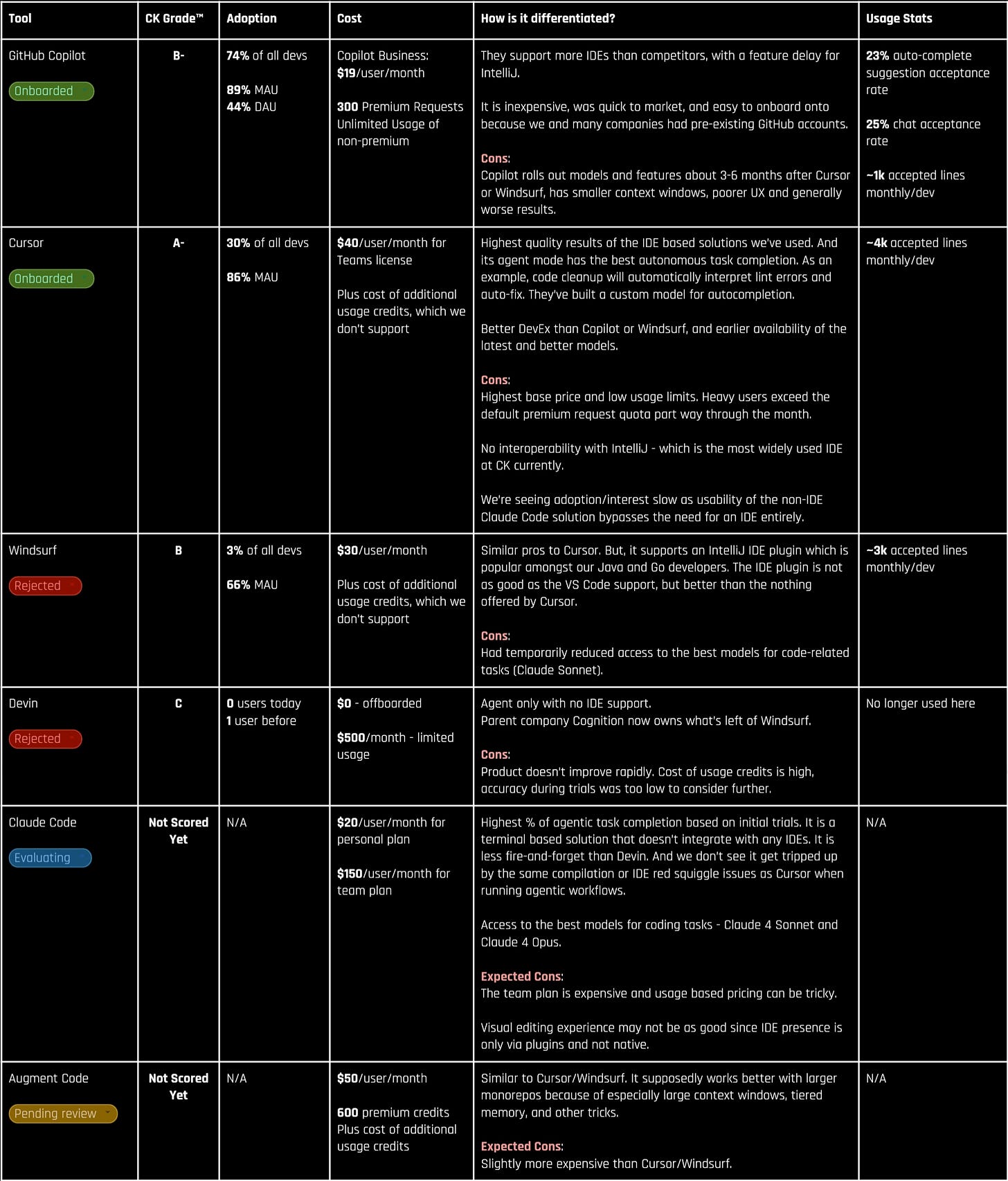

Comparison of GenAI Vendors @ CloudKitchens

We continuously reassess our tools and strategies to stay on top of newer capabilities and opportunities. We hold off on rolling out tools widely until they’re useful, so engineers aren’t put off by negative first impressions. Below is our latest assessment as of August 2025.

The CK Grade™ is a relative score, with A being the maximum value. There is no A+ since “There’s always room for improvement” according to my high school English teacher.

Since developer satisfaction with these tools has been high, adoption has been fairly organic and has not required any strong top-down mandates. We’ve run a couple of lightweight AI enablement sessions, but mostly developers see others using the tools effectively and being more productive, get curious, and test them out themselves.

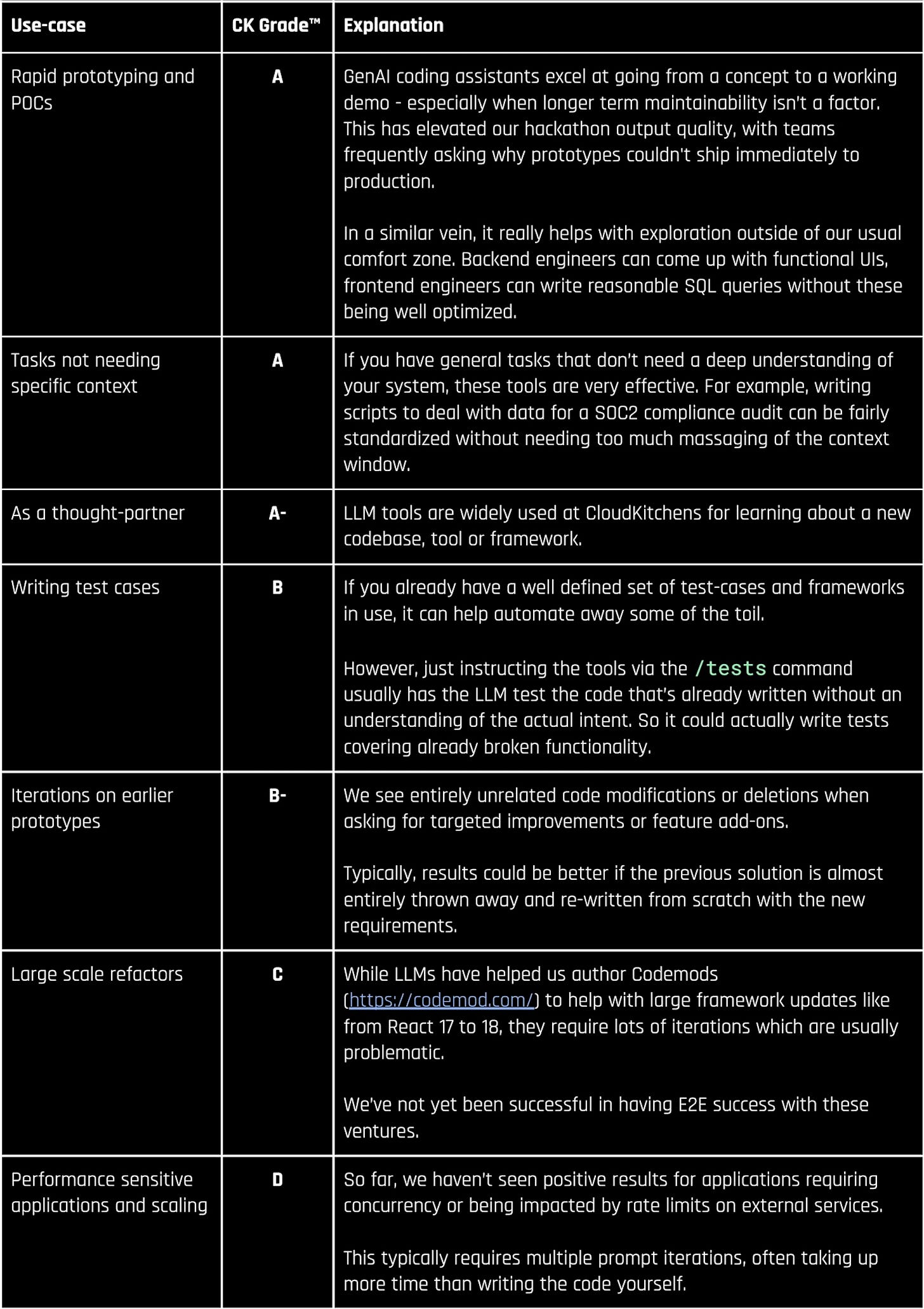

Productivity Impact by Use-Case

Our baseline engineering productivity is high. CK engineering candidates are mainly sourced from big tech or highly successful startups, and our interview pass rate is only 1-3% despite this strong sourcing pedigree. Of these engineers, our study group included only those with 5+ years of coding experience.

We measure a basket of metrics and countermeasures to try and understand impact. No single measure is sufficient. For example, engineers who opted into using GenAI tools experienced a 10-15% uplift on lines of code shipped. But without the ability to control for participant enthusiasm, this single measure doesn’t clearly show causality. Nor is more LOC necessarily a good thing.

Therefore, we also kept tabs on SPACE metrics, DAU, MAU, number of completions, and self-reported time savings, and did a number of deep dives into individual engineering workflows to try to overcome self-reporting bias (see study).

Our conclusion from these composite signals is that our engineers are saving a median of 3 hours per week by using GenAI DevEx tools like Cursor, compared to a baseline of conventional development + ChatGPT usage. The time savings vary widely by activity. We corroborated this via individual deep dives.

Notably, we have not been able to tie any of these improvements back to overall engineering project velocity. We believe that this is because active coding typically takes <25% of engineers’ time (see study). And even while actively writing code, only a small subset of the time is typically spent on tasks where these tools shine.
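The arithmetic behind this can be sketched with Amdahl's law, which we did not use in the study but which captures the same reasoning: when only a fraction of total time is accelerated, the overall speedup is capped by the untouched remainder. In the sketch below, `CODING_SHARE` is the 25% upper bound mentioned above, while `ASSISTABLE_SHARE` and `LOCAL_SPEEDUP` are hypothetical values chosen purely for illustration.

```python
def overall_speedup(affected_fraction: float, local_speedup: float) -> float:
    """Amdahl's law: whole-workflow speedup when only a fraction of it gets faster."""
    if not 0.0 <= affected_fraction <= 1.0:
        raise ValueError("affected_fraction must be between 0 and 1")
    if local_speedup <= 0.0:
        raise ValueError("local_speedup must be positive")
    return 1.0 / ((1.0 - affected_fraction) + affected_fraction / local_speedup)


CODING_SHARE = 0.25      # upper bound from the text: active coding is <25% of time
ASSISTABLE_SHARE = 0.5   # hypothetical: share of coding time where the tools shine
LOCAL_SPEEDUP = 2.0      # hypothetical: how much faster those tasks become

affected = CODING_SHARE * ASSISTABLE_SHARE

# Speedup of the whole workflow under these assumptions.
print(f"overall speedup: {overall_speedup(affected, LOCAL_SPEEDUP):.3f}x")

# Even if the assisted tasks took zero time, the rest of the week is unchanged.
print(f"ceiling: {overall_speedup(affected, float('inf')):.3f}x")
```

Under these illustrative numbers the overall speedup is roughly 1.07x, with a ceiling of roughly 1.14x, which is small enough to be hard to distinguish from noise in project-level velocity.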

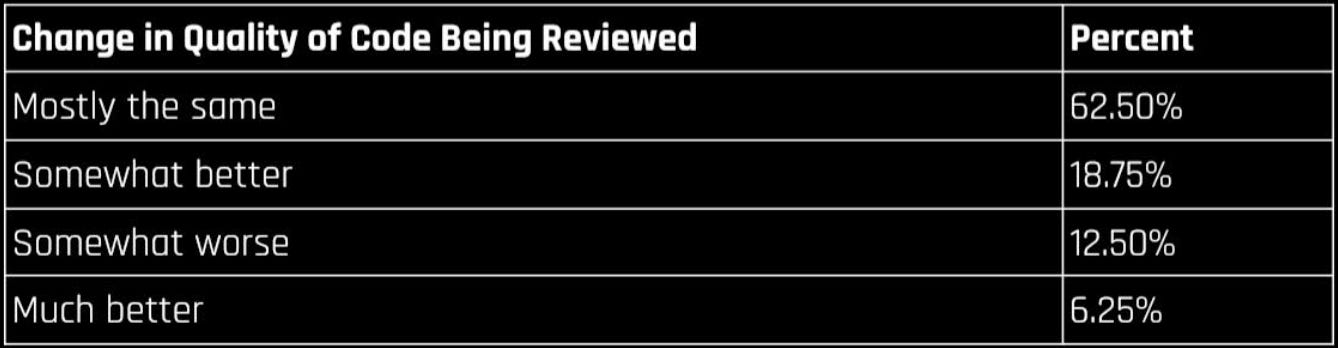

Adverse Quality Impact Not Found

GenAI-produced code can contain subtle bugs or shocking naivety that humans would not produce themselves. Engineers also find GenAI especially useful for generating code in domains they are unfamiliar with, for example backend engineers using GenAI to generate frontend code. As a result, it’s easy to imagine issues in GenAI-produced code slipping past the engineers using it and into our production code base.

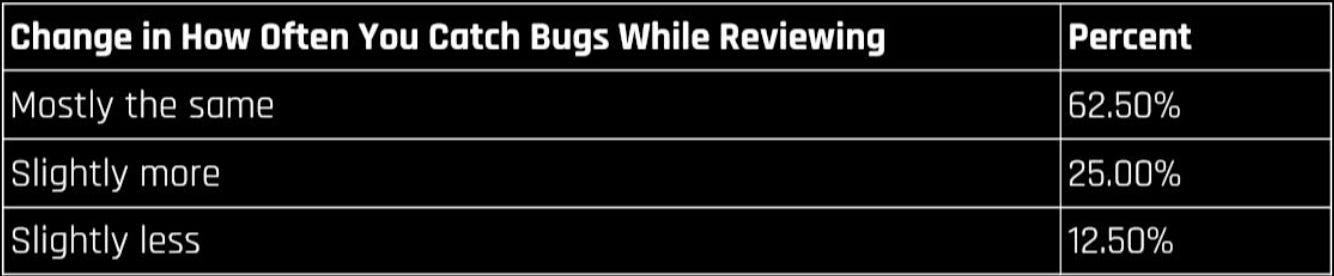

At this early stage, we haven’t seen evidence of quality issues impacting production (incidents, bugs, etc). To try and find other leading indicators of risk, we surveyed teammates of the heaviest GenAI users at the company.

We asked a number of related questions to try to uncover problems. The most common complaint: code whose style doesn’t match our codebase is showing up more often. This seems minor and fixable.

We haven’t seen a serious trend of more bugs being discovered during review or of overall reviewer burden increasing.

Interesting GenAI Applications

There are some basic GenAI applications involving summarization and contextual chatbots that index relevant data from Confluence, Slack, Google Docs and GitHub.

We wanted to explore avenues beyond that. Here are some such use-cases that are already live.

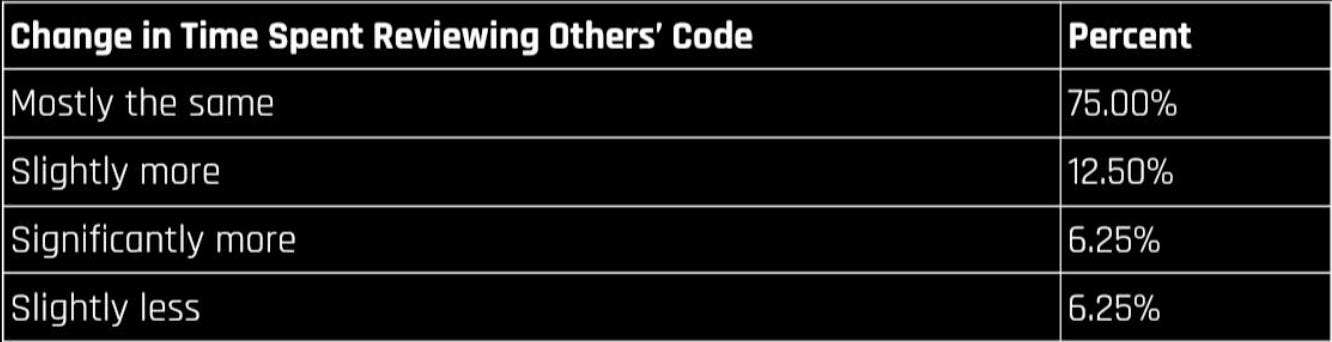

On-call AI

Instead of restricting GenAI to code-writing, we also built an agent that queries observability data, metrics, and logs, giving engineers crucial insights to quickly pinpoint and mitigate incidents. In early experiments the agent has been remarkably effective, identifying incident root causes in one or two shots. Future improvements in this space include evolving beyond the text-based chat interface, improving context management, and building custom fine-tuned LLMs specifically geared towards observability data.

We can pull something like this off meaningfully because of our past efforts to standardize our CI/CD processes and overall service observability.

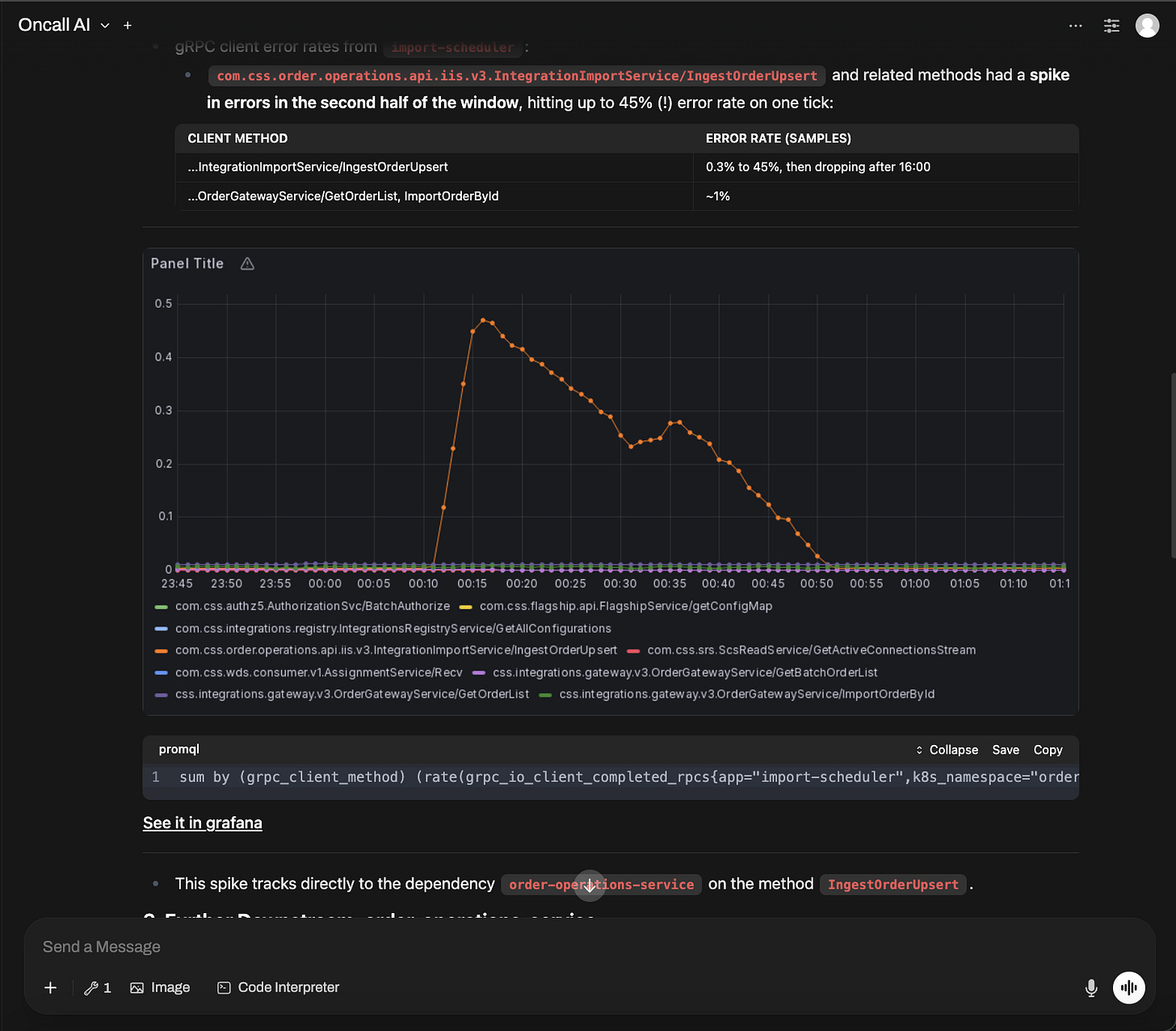

Monorepo Agent

The Monorepo Agent is a GenAI tool equipped with MCP servers to understand the entire software development process at CloudKitchens, starting with branch creation and continuing through local iteration, PR creation, and CI validation.

Our first usage of this tool was on toil-heavy maintenance tasks, such as cleaning up static analysis suppressions that had been lingering in our backlog.

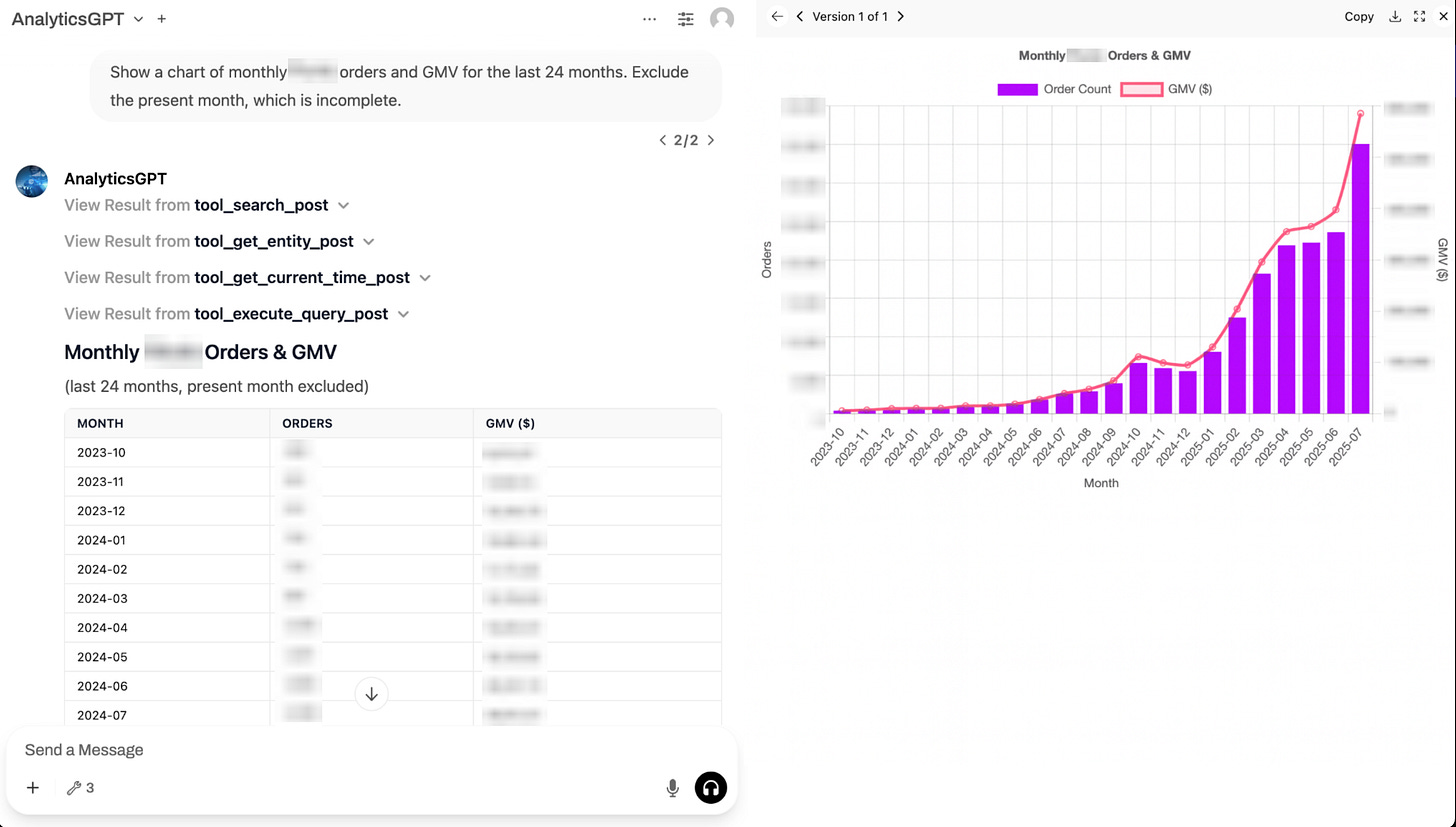

AnalyticsGPT

This is our take on the next generation of data analysts: a tool designed to help answer data queries via natural language while retaining the user’s access controls. It shows its chain of thought so you can trust and verify the analysis.

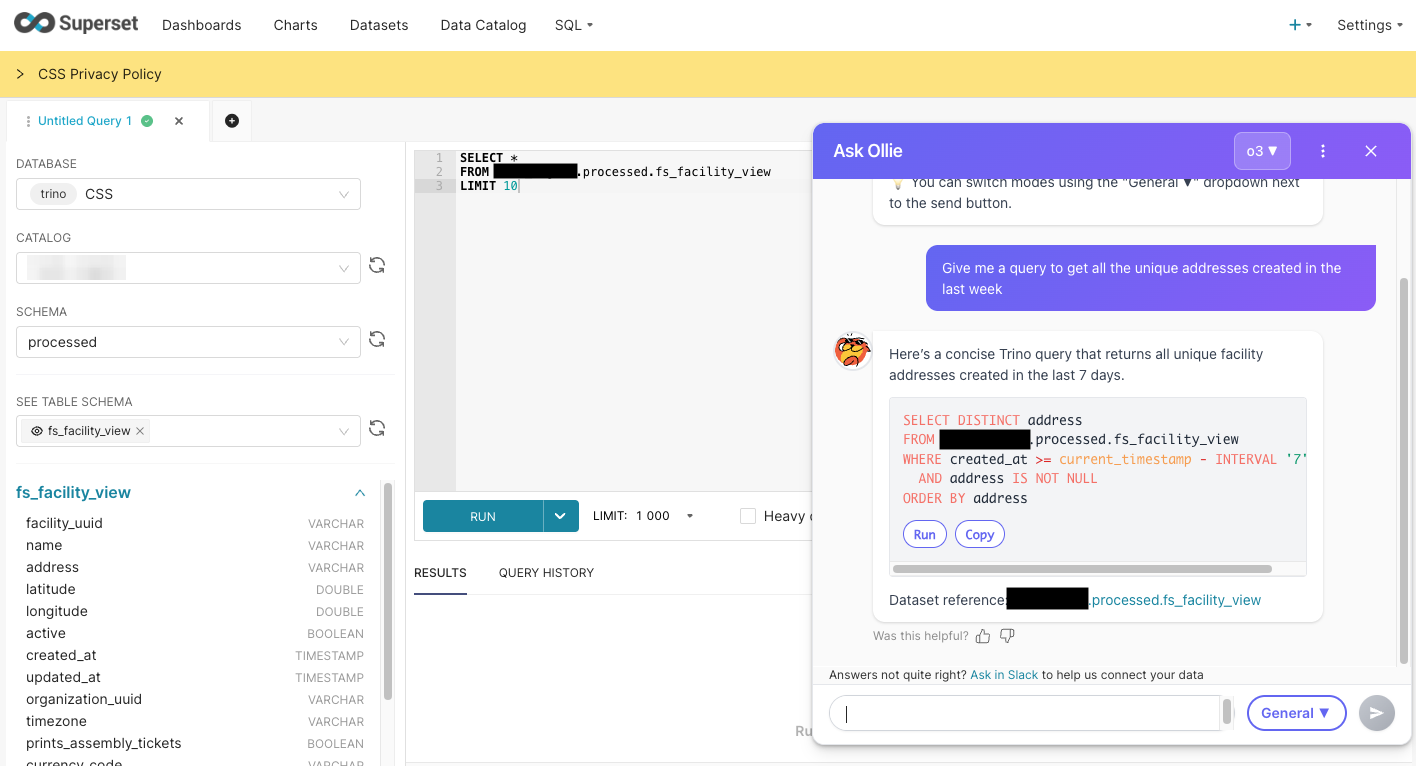

Superset Agent

We find that impact and adoption are organically high when we add targeted features where developers are already spending a lot of time. An example is our agentic Superset assistant, which comes baked in with full knowledge of the dataset and the table schema.

Future DevEx Platform Explorations

From Single Agent to Distributed Execution

As GenAI tools evolve beyond simple code completion, we're seeing a shift toward distributed agent systems that pass state and partial task completion between specialized components. This approach mirrors how software teams coordinate work, but compressed into a single developer's workflow. It’s almost like we’re reinventing how teams collaborate and are all playing the role of an Engineering Manager.

Investing in ephemeral development environments could pay off here to avoid being constrained by local resources.
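As an illustration of passing state and partial task completion between specialized components, here is a minimal sketch in which each agent receives an immutable task state, adds its own output, and hands the result on. Everything in it is hypothetical: the `TaskState` shape, the `planner`/`coder`/`reviewer` stand-ins (plain functions rather than LLM calls), and the fixed `PIPELINE` order are assumptions for the example, not a description of a system we run.

```python
from dataclasses import dataclass, field, replace
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class TaskState:
    """Everything one agent needs to pick up where another left off."""
    goal: str
    completed: Tuple[str, ...] = ()
    artifacts: Dict[str, str] = field(default_factory=dict)

    def advance(self, step: str, artifact: str) -> "TaskState":
        """Return a new state with one more finished step and its output."""
        return replace(
            self,
            completed=self.completed + (step,),
            artifacts={**self.artifacts, step: artifact},
        )


Agent = Callable[[TaskState], TaskState]


def planner(state: TaskState) -> TaskState:
    return state.advance("plan", f"plan for: {state.goal}")


def coder(state: TaskState) -> TaskState:
    # Consumes the planner's artifact instead of re-deriving it.
    return state.advance("code", f"patch implementing [{state.artifacts['plan']}]")


def reviewer(state: TaskState) -> TaskState:
    return state.advance("review", f"approved [{state.artifacts['code']}]")


PIPELINE: List[Tuple[str, Agent]] = [
    ("plan", planner),
    ("code", coder),
    ("review", reviewer),
]


def run(state: TaskState) -> TaskState:
    """Run the remaining steps, skipping any that the state already records as done."""
    for step, agent in PIPELINE:
        if step in state.completed:
            continue
        state = agent(state)
    return state
```

Because the state records which steps are finished, a partially completed task can be serialized, moved to another worker (for instance an ephemeral environment), and resumed with `run` without repeating earlier work.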

Investing More in Evals and Observability

Due to the inherent non-determinism that comes with LLMs today, we need to build in mechanisms to know how well an LLM use-case is performing and monitor its quality and adoption over time.
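One simple mechanism is to sample each eval case several times and track the pass rate, since a single run says little about a non-deterministic system. The sketch below assumes hypothetical names throughout (`EvalCase`, `pass_rate`, `make_flaky_model`), and the "model" is a seeded random stand-in rather than a real LLM call.

```python
import random
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class EvalCase:
    prompt: str
    check: Callable[[str], bool]  # returns True when the output is acceptable


def pass_rate(model: Callable[[str], str], cases: List[EvalCase],
              samples_per_case: int = 5) -> float:
    """Fraction of sampled outputs that pass their check, across all cases."""
    if not cases or samples_per_case <= 0:
        raise ValueError("need at least one case and one sample per case")
    passed = 0
    for case in cases:
        # Repeated sampling is the point: the same prompt may pass or fail.
        for _ in range(samples_per_case):
            if case.check(model(case.prompt)):
                passed += 1
    return passed / (len(cases) * samples_per_case)


def make_flaky_model(error_rate: float, seed: int) -> Callable[[str], str]:
    """Stand-in for an LLM: answers '4' but is wrong with probability error_rate."""
    rng = random.Random(seed)

    def model(prompt: str) -> str:
        return "5" if rng.random() < error_rate else "4"

    return model


CASES = [EvalCase(prompt="What is 2 + 2?", check=lambda out: out.strip() == "4")]

rate = pass_rate(make_flaky_model(error_rate=0.2, seed=0), CASES, samples_per_case=50)
print(f"pass rate: {rate:.2f}")
```

Emitting this number on a schedule, per use-case and per model version, turns quality into a time series that can be monitored and alerted on like any other service metric.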

E2E Agentic Campaign Management

Right now, we have the ability to have an agent with an understanding of our end-to-end developer lifecycle, but as always, we are hungry for more.

We want to make it easier to run larger campaigns that require several dependent changes, with more granular checkpoint validation. This will allow us to make the leap from smaller one-off changes like fixing specific bugs to doing full-fledged framework migrations, moving from an individual productivity boost to a collaborative workflow with org-wide impact.

One concrete test case we’d like to prove this for is the white-glove migration of our ML and DS tech stack’s package manager from Poetry to uv, specifically to validate the claimed performance improvements.
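A campaign of dependent changes with checkpoint validation can be sketched as a dependency graph that is applied in topological order, stopping at the first change whose checkpoint fails. This is a minimal sketch using Python's standard `graphlib` module (Python 3.9+). The `run_campaign` and `CheckpointFailed` names, the callback signatures, and the change names in `CAMPAIGN` are hypothetical and do not describe the actual migration plan.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


class CheckpointFailed(Exception):
    """Raised when a change was applied but did not pass validation."""

    def __init__(self, change: str, applied: List[str]) -> None:
        super().__init__(f"checkpoint failed after applying {change!r}")
        self.change = change
        self.applied = applied  # changes that were applied and validated before it


def run_campaign(depends_on: Dict[str, Set[str]],
                 apply_change: Callable[[str], None],
                 validate: Callable[[str], bool]) -> List[str]:
    """Apply changes so that every change runs after the ones it depends on."""
    applied: List[str] = []
    # static_order() yields each change only after all of its dependencies.
    for change in TopologicalSorter(depends_on).static_order():
        apply_change(change)
        if not validate(change):
            raise CheckpointFailed(change, applied)
        applied.append(change)
    return applied


# Hypothetical change names, each mapped to the changes it depends on.
CAMPAIGN: Dict[str, Set[str]] = {
    "convert-lockfile": set(),
    "update-ci-config": {"convert-lockfile"},
    "remove-old-tooling": {"update-ci-config"},
}
```

In practice `apply_change` would hand a change to an agent and `validate` would run the relevant CI checks; stopping at the first failed checkpoint keeps a bad change from cascading into everything that depends on it.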

Conclusion

We are very excited about this new technology that makes developers feel like we have superpowers. We’re optimistic about the future, but we’re not seeing the “fall of software engineering” or that “SaaS is dead” like tech social media would have you believe. We’re actually seeing even more reward for good engineering fundamentals.

We’re publishing this blog post at the risk of it being out-of-date within a few weeks (if we’re lucky). But then again, maybe we could just have AI generate the next iteration autonomously. Right?